How to become a correlation wizard with the brightwind library!¶

[1]:

import datetime

print('Last updated: {}'.format(datetime.date.today().strftime('%d %B, %Y')))

Last updated: 05 July, 2019

Outline:¶

This guide will demonstrate various techniques to correlate a sample site dataset to 4 separate reference datasets using the following steps:

Import the brightwind library, load some sample raw data and clean it

Import 4 sample reference datasets to use in the correlation

Perform a ordinary least squares correlation for 1 dataset and time period

Apply a long term adjustment to the measured wind speed time series based on the correlation results

Perform a ordinary least squares correlation for multiple datasets and time periods

Import brightwind and data¶

[2]:

# import the brightwind library

import brightwind as bw

[3]:

# specify the directory of the demo dataset

filepath = r'C:\Users\Stephen\Documents\Analysis\demo_data.csv'

# load the raw data

data = bw.load_csv(filepath)

# apply cleaning to the raw data

data = bw.apply_cleaning(data, r'C:\Users\Stephen\Documents\Analysis\demo_cleaning_file.csv')

# show the first 5 rows of the cleaned data

data.head(5)

Cleaning applied. (Please remember to assign the cleaned returned DataFrame to a variable.)

[3]:

| Spd80mN | Spd80mS | Spd60mN | Spd60mS | Spd40mN | Spd40mS | Spd80mNStd | Spd80mSStd | Spd60mNStd | Spd60mSStd | ... | Dir78mSStd | Dir58mS | Dir58mSStd | Dir38mS | Dir38mSStd | T2m | RH2m | P2m | PrcpTot | BattMin | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Timestamp | |||||||||||||||||||||

| 2016-01-09 15:30:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 15:40:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 17:00:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 17:10:00 | 7.382 | 7.325 | 6.818 | 6.689 | 6.252 | 6.174 | 0.844 | 0.810 | 0.897 | 0.875 | ... | 4.680 | 118.8 | 5.107 | 115.6 | 5.189 | 0.954 | 100.0 | 934.0 | 0.0 | 12.71 |

| 2016-01-09 17:20:00 | 7.977 | 7.791 | 8.110 | 7.915 | 8.140 | 7.974 | 0.556 | 0.528 | 0.562 | 0.524 | ... | 3.123 | 115.9 | 2.960 | 113.6 | 3.540 | 0.863 | 100.0 | 934.0 | 0.0 | 12.69 |

5 rows × 29 columns

Import reference datasets¶

For this example, we will import 4 MERRA-2 reanalysis datasets. These can be found as sample datasets within the brightwind library. We use the load_csv() function to load in each dataset from a csv file.

[4]:

# load each of the 4 reanalysis datasets to its own dataframe

Merra2_NE = bw.load_csv(r'C:\Users\Stephen\Documents\Analysis\MERRA-2_NE_2000-01-01_2017-06-30.csv')

Merra2_NW = bw.load_csv(r'C:\Users\Stephen\Documents\Analysis\MERRA-2_NW_2000-01-01_2017-06-30.csv')

Merra2_SE = bw.load_csv(r'C:\Users\Stephen\Documents\Analysis\MERRA-2_SE_2000-01-01_2017-06-30.csv')

Merra2_SW = bw.load_csv(r'C:\Users\Stephen\Documents\Analysis\MERRA-2_SW_2000-01-01_2017-06-30.csv')

# show the first 5 lines of NE dataset

Merra2_NE.head(5)

[4]:

| WS50m_m/s | WD50m_deg | T2M_degC | PS_hPa | |

|---|---|---|---|---|

| DateTime | ||||

| 2000-01-01 00:00:00 | 6.840 | 275 | 4.06 | 997.17 |

| 2000-01-01 01:00:00 | 6.506 | 264 | 3.44 | 997.64 |

| 2000-01-01 02:00:00 | 7.095 | 254 | 3.12 | 998.39 |

| 2000-01-01 03:00:00 | 8.088 | 246 | 3.19 | 999.12 |

| 2000-01-01 04:00:00 | 8.386 | 239 | 3.18 | 999.61 |

Correlate to 1 dataset and using 1 averaging period¶

Next we’ll perform a basic linear regression correlation using the Ordinary Least Squares method between the 80m north orientated site anemometer and the MERRA-2 dataset located to the North East. We use the ‘averaging_prd’ argument to set the averaging period for which the correlation should be carried out. This can be set to 10-min or multiples of ten minutes (10T, 30T) (depending on the interval of both datasets), hourly or multiples of 1 hour (1H, 2H, 3H, etc), daily or multiples of 1 day (1D, 2D, 3D, 15D, etc), monthly (1M) or yearly (1AS). Here we apply a monthly correlation. Finally we set the ‘coverage_threshold’, this is the minimum coverage of data within each averaging period from both the reference and site dataset that will be passed to the correlation. In this case we use a coverage threshold of 90%.

[5]:

# set up correlation

ord_lst_sq = bw.Correl.OrdinaryLeastSquares(Merra2_NE['WS50m_m/s'], data['Spd80mN'],

averaging_prd='1M', coverage_threshold=0.90)

The correlation function returns a python object. This has to be subsequently run and plotted using the commands run() and plot().

[6]:

# run correlation

ord_lst_sq.run()

{'Num data points': 16,

'offset': -0.06161432045219376,

'r2': 0.9407857754972966,

'slope': 0.9955561116923611}

The correlation has now run and the main results are returned. The slope and offset of the linear regression line are displayed along with the \(R^2\) value. These results can be returned at any time using the .show_params() command.

[7]:

#show parameters

ord_lst_sq.show_params()

{'Num data points': 16,

'offset': -0.06161432045219376,

'r2': 0.9407857754972966,

'slope': 0.9955561116923611}

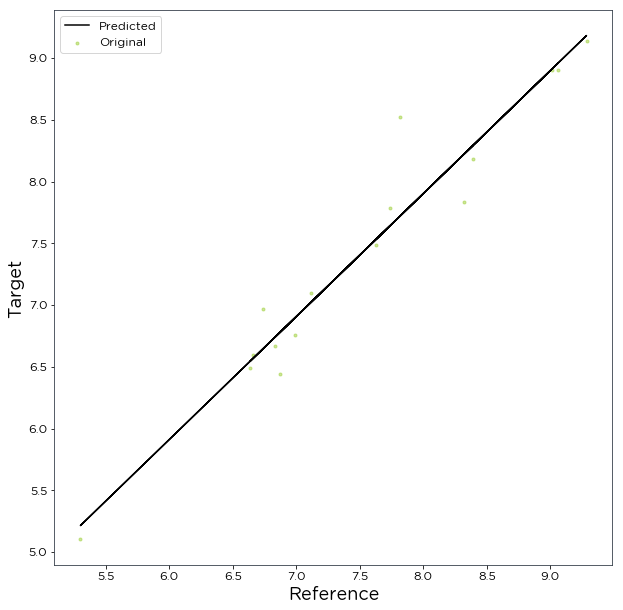

To show the correlation plot along with the linear regression line we simply input:

[8]:

# show plot

ord_lst_sq.plot()

[8]:

Now that we have a correlation we will want to adjust our data accordingly. We can synthesize monthly Spd80mN mean wind speeds from the Merra2_NE data using the synthesize() method. This will synthesize monthly means for months that Spd80mN doesn’t cover and splices it with months Spd80mN does cover.

[9]:

# synthesize monthly means over the long term record

LT_monthly_means = ord_lst_sq.synthesize()

# Show the first 5 values

LT_monthly_means.head(5)

[9]:

| Spd80mN_Synthesized | |

|---|---|

| Timestamp | |

| 2000-01-01 | 9.300975 |

| 2000-02-01 | 11.099150 |

| 2000-03-01 | 7.999968 |

| 2000-04-01 | 6.842368 |

| 2000-05-01 | 6.191083 |

Apply a long term adjustment to the measured wind speed time series based on the correlation results¶

We can use the results of the above correlation to rescale the Spd80mN wind speed time series and put it into a long term context. First we’ll use the momm() (mean of monthly means) function to derive the long term mean of the dataset we synthesized above. This represents our target long term mean speed.

[10]:

# derive long term target mean speed

LT_target_speed = bw.momm(LT_monthly_means)

# show the value

LT_target_speed

[10]:

7.623917277960366

Next, we’ll derive a scale factor we can apply to the measurements to achieve this long term mean.

[11]:

# derive scale factor as ratio of means

LT_scale_factor = LT_target_speed/data.Spd80mN.mean()

# show the value

LT_scale_factor

[11]:

1.0140026948884429

Then, we can use the scale_wind_speed() function to rescale the measurements based on the above factor, placing them in a long term context. We’ll also allocate this a new variable within the existing dataframe.

[12]:

# scale wind speed

data['Spd80mN_LT'] = bw.scale_wind_speed(data.Spd80mN, LT_scale_factor)

# show first 5 timestamps. The new variable can be seen in the last column of the dataframe.

data.head(5)

[12]:

| Spd80mN | Spd80mS | Spd60mN | Spd60mS | Spd40mN | Spd40mS | Spd80mNStd | Spd80mSStd | Spd60mNStd | Spd60mSStd | ... | Dir58mS | Dir58mSStd | Dir38mS | Dir38mSStd | T2m | RH2m | P2m | PrcpTot | BattMin | Spd80mN_LT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Timestamp | |||||||||||||||||||||

| 2016-01-09 15:30:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 15:40:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 17:00:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 17:10:00 | 7.382 | 7.325 | 6.818 | 6.689 | 6.252 | 6.174 | 0.844 | 0.810 | 0.897 | 0.875 | ... | 118.8 | 5.107 | 115.6 | 5.189 | 0.954 | 100.0 | 934.0 | 0.0 | 12.71 | 7.485368 |

| 2016-01-09 17:20:00 | 7.977 | 7.791 | 8.110 | 7.915 | 8.140 | 7.974 | 0.556 | 0.528 | 0.562 | 0.524 | ... | 115.9 | 2.960 | 113.6 | 3.540 | 0.863 | 100.0 | 934.0 | 0.0 | 12.69 | 8.088699 |

5 rows × 30 columns

Now, let’s confirm that the mean of our new variable is equal to our long term mean speed target.

[13]:

# show ratio of mean values

data.Spd80mN_LT.mean()/LT_target_speed

[13]:

1.0000000000000002

The resulting variable can then be used along with concurrent wind direction data (such as ‘data.Dir78mS’) to generate a tab file for wind flow modelling as shown in the tutorial for exporting a tab file.

Correlation for multiple datasets and time periods¶

Note: in a future release we plan to create a more user-friendly wrapper to perform multiple correlations at once.

As well as performing one correlation with specific parameters, the brightwind library can be used to correlate to multiple reference datasets using multiple averaging periods in one cell. The code below performs two ‘for’ loops to iterate through each of the MERRA-2 datasets and a number of averaging periods, and returns the results in a dataframe. In order to allocate the results as a dataframe, we need to first import the pandas library.

[14]:

import pandas as pd

We then assign each of the datasets to a python dictionary, so we can easily loop through them later.

[15]:

merra2_nodes = {

'M2_NE': Merra2_NE,

'M2_NW': Merra2_NW,

'M2_SE': Merra2_SE,

'M2_SW': Merra2_SW

}

The following code can then be used to run each for loop and add results to the new dataframe. Specific steps are explained in the comments throughout.

[16]:

# set the target and reference column names

target_column = 'Spd80mN'

ref_column = 'WS50m_m/s'

# create a list to store all of the results

correl_results = []

# set up first loop, which will step through the 4 reanalysis datasets

for node in merra2_nodes:

# set up the second loop, nested within the first one. This one will step through a number of averaging periods.

for avg_prd in ['1D','3D','7D','15D','1M']:

# set up the correlation object, as done previously

ord_lst_sq = bw.Correl.OrdinaryLeastSquares(merra2_nodes[node][ref_column], data[target_column],

averaging_prd=avg_prd, coverage_threshold=0.90)

# run the correlation

ord_lst_sq.run()

# Append the results to the results list

correl_results.append({

'1, Reanalysis dataset': node,

'2, Averaging Period': avg_prd,

'3, Num data points': ord_lst_sq.params['Num data points'],

'4, LT Reference MOMM': bw.momm(merra2_nodes[node][ref_column]),

'5, R^2': ord_lst_sq.params['r2'],

'6, Slope': ord_lst_sq.params['slope'],

'7, Offset': ord_lst_sq.params['offset'],

'8, LT Target wind speed': bw.momm(ord_lst_sq.synthesize()),

'9, Adjustment of mean [%]': ((bw.momm(ord_lst_sq.synthesize()) / data[target_column].mean())-1)*100,

})

{'Num data points': 509,

'offset': -0.42487844744002934,

'r2': 0.8950111609239269,

'slope': 1.040347744890449}

{'Num data points': 166,

'offset': -0.6361907996697532,

'r2': 0.9347172373809141,

'slope': 1.065506067469903}

{'Num data points': 71,

'offset': -0.5882020865023719,

'r2': 0.9340706234081555,

'slope': 1.0608473791176414}

{'Num data points': 34,

'offset': -0.3469504509964136,

'r2': 0.9580669877674568,

'slope': 1.0312563688477243}

{'Num data points': 16,

'offset': -0.06161432045219376,

'r2': 0.9407857754972966,

'slope': 0.9955561116923611}

{'Num data points': 509,

'offset': -0.09566950948358079,

'r2': 0.8467424753668483,

'slope': 0.9513356833362564}

{'Num data points': 166,

'offset': -0.3094075956208962,

'r2': 0.8958363060049799,

'slope': 0.9763981012985756}

{'Num data points': 71,

'offset': -0.2654084062535991,

'r2': 0.890799351069137,

'slope': 0.9719866140592953}

{'Num data points': 34,

'offset': 0.15682108376560136,

'r2': 0.9206266548035889,

'slope': 0.9202250143158889}

{'Num data points': 16,

'offset': 0.6453320138995284,

'r2': 0.8772421506713155,

'slope': 0.860974299364037}

{'Num data points': 509,

'offset': -0.16478996565523255,

'r2': 0.8456645998836172,

'slope': 0.9676742260535489}

{'Num data points': 166,

'offset': -0.38866696097764375,

'r2': 0.8861973972237285,

'slope': 0.9939478232735908}

{'Num data points': 71,

'offset': -0.3904784183852624,

'r2': 0.8993332053612523,

'slope': 0.9970145362591194}

{'Num data points': 34,

'offset': -0.13824125155034708,

'r2': 0.9311687736097964,

'slope': 0.9642528458509261}

{'Num data points': 16,

'offset': 0.21439627305515263,

'r2': 0.9162048374395771,

'slope': 0.9216301350074104}

{'Num data points': 509,

'offset': 0.12359784304781836,

'r2': 0.7800512366998469,

'slope': 0.897860529255207}

{'Num data points': 166,

'offset': -0.13024511222955648,

'r2': 0.8299573609509825,

'slope': 0.9274217939487325}

{'Num data points': 71,

'offset': -0.17839001354473732,

'r2': 0.8392903588116132,

'slope': 0.9348187140549352}

{'Num data points': 34,

'offset': 0.17384790241582168,

'r2': 0.8834584307562071,

'slope': 0.8907949268795059}

{'Num data points': 16,

'offset': 0.8554505335265273,

'r2': 0.8414235670295263,

'slope': 0.8092854017974048}

Each set of print results above are the result of the obj.run() function. We have saved a summary of the results under ‘correl_results’, so lets take a look at that now.

[17]:

# put the list into a DataFrame for ease of analysis.

correl_results_df = pd.DataFrame(correl_results)

correl_results_df

[17]:

| 1, Reanalysis dataset | 2, Averaging Period | 3, Num data points | 4, LT Reference MOMM | 5, R^2 | 6, Slope | 7, Offset | 8, LT Target wind speed | 9, Adjustment of mean [%] | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | M2_NE | 1D | 509 | 7.707068 | 0.895011 | 1.040348 | -0.424878 | 7.601000 | 1.095465 |

| 1 | M2_NE | 3D | 166 | 7.707068 | 0.934717 | 1.065506 | -0.636191 | 7.576759 | 0.773044 |

| 2 | M2_NE | 7D | 71 | 7.707068 | 0.934071 | 1.060847 | -0.588202 | 7.596172 | 1.031245 |

| 3 | M2_NE | 15D | 34 | 7.707068 | 0.958067 | 1.031256 | -0.346950 | 7.600418 | 1.087716 |

| 4 | M2_NE | 1M | 16 | 7.707068 | 0.940786 | 0.995556 | -0.061614 | 7.623917 | 1.400269 |

| 5 | M2_NW | 1D | 509 | 8.114629 | 0.846742 | 0.951336 | -0.095670 | 7.630865 | 1.492676 |

| 6 | M2_NW | 3D | 166 | 8.114629 | 0.895836 | 0.976398 | -0.309408 | 7.612278 | 1.245460 |

| 7 | M2_NW | 7D | 71 | 8.114629 | 0.890799 | 0.971987 | -0.265408 | 7.629251 | 1.471205 |

| 8 | M2_NW | 15D | 34 | 8.114629 | 0.920627 | 0.920225 | 0.156821 | 7.624570 | 1.408951 |

| 9 | M2_NW | 1M | 16 | 8.114629 | 0.877242 | 0.860974 | 0.645332 | 7.643720 | 1.663650 |

| 10 | M2_SE | 1D | 509 | 8.087812 | 0.845665 | 0.967674 | -0.164790 | 7.666124 | 1.961635 |

| 11 | M2_SE | 3D | 166 | 8.087812 | 0.886197 | 0.993948 | -0.388667 | 7.645255 | 1.684067 |

| 12 | M2_SE | 7D | 71 | 8.087812 | 0.899333 | 0.997015 | -0.390478 | 7.676901 | 2.104962 |

| 13 | M2_SE | 15D | 34 | 8.087812 | 0.931169 | 0.964253 | -0.138241 | 7.656873 | 1.838585 |

| 14 | M2_SE | 1M | 16 | 8.087812 | 0.916205 | 0.921630 | 0.214396 | 7.678354 | 2.124289 |

| 15 | M2_SW | 1D | 509 | 8.409316 | 0.780051 | 0.897861 | 0.123598 | 7.677981 | 2.119335 |

| 16 | M2_SW | 3D | 166 | 8.409316 | 0.829957 | 0.927422 | -0.130245 | 7.662884 | 1.918537 |

| 17 | M2_SW | 7D | 71 | 8.409316 | 0.839290 | 0.934819 | -0.178390 | 7.686714 | 2.235478 |

| 18 | M2_SW | 15D | 34 | 8.409316 | 0.883458 | 0.890795 | 0.173848 | 7.664287 | 1.937200 |

| 19 | M2_SW | 1M | 16 | 8.409316 | 0.841424 | 0.809285 | 0.855451 | 7.671619 | 2.034711 |

We can easily scan down through the results to see the different \(R^2\) values for different averaging periods and reanalysis datasets, and see other key metrics such as the predicted long term wind speed and the percentage adjustment from the measured site wind speed to the long term wind speed.

Now lets scale the wind speed once again, this time based on the correlation with the highest \(R^2\) value in our table. First, let’s show all the parameters of the correlation with the max \(R^2\).

[18]:

# show correlation results when r2 is equal to the max r2

correl_results_df[correl_results_df['5, R^2'] == max(correl_results_df['5, R^2'])]

[18]:

| 1, Reanalysis dataset | 2, Averaging Period | 3, Num data points | 4, LT Reference MOMM | 5, R^2 | 6, Slope | 7, Offset | 8, LT Target wind speed | 9, Adjustment of mean [%] | |

|---|---|---|---|---|---|---|---|---|---|

| 3 | M2_NE | 15D | 34 | 7.707068 | 0.958067 | 1.031256 | -0.34695 | 7.600418 | 1.087716 |

We can pull out the index of this correlation from our table by adding on .index[0] on the end amd assigning it to a variable.

[19]:

# get row with the best correlation

row = correl_results_df[correl_results_df['5, R^2'] == max(correl_results_df['5, R^2'])].index[0]

# show index

row

[19]:

3

Then we can refer to the long term target speed from this correlation as follows.

[20]:

correl_results_df['8, LT Target wind speed'][row]

[20]:

7.600417545842798

This can be used to derive a scale factor in the same way we did before.

[21]:

# derive scale factor as ratio of means

LT_scale_factor = correl_results_df['8, LT Target wind speed'][row] / data[target_column].mean()

# show the value

LT_scale_factor

[21]:

1.010877163638877

Finally, we can create a second LT adjusted time series using the same commands as before, once again add this to our existing DataFrame alongside the previous long term variable.

[22]:

# scale wind speed

data['Spd80mN_LT_2'] = bw.scale_wind_speed(data[target_column], LT_scale_factor)

# show first 5 timestamps. The new variable can be seen to the right of the dataframe.

data.head(5)

[22]:

| Spd80mN | Spd80mS | Spd60mN | Spd60mS | Spd40mN | Spd40mS | Spd80mNStd | Spd80mSStd | Spd60mNStd | Spd60mSStd | ... | Dir58mSStd | Dir38mS | Dir38mSStd | T2m | RH2m | P2m | PrcpTot | BattMin | Spd80mN_LT | Spd80mN_LT_2 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Timestamp | |||||||||||||||||||||

| 2016-01-09 15:30:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 15:40:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 17:00:00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 2016-01-09 17:10:00 | 7.382 | 7.325 | 6.818 | 6.689 | 6.252 | 6.174 | 0.844 | 0.810 | 0.897 | 0.875 | ... | 5.107 | 115.6 | 5.189 | 0.954 | 100.0 | 934.0 | 0.0 | 12.71 | 7.485368 | 7.462295 |

| 2016-01-09 17:20:00 | 7.977 | 7.791 | 8.110 | 7.915 | 8.140 | 7.974 | 0.556 | 0.528 | 0.562 | 0.524 | ... | 2.960 | 113.6 | 3.540 | 0.863 | 100.0 | 934.0 | 0.0 | 12.69 | 8.088699 | 8.063767 |

5 rows × 31 columns

Of course, \(R^2\) is not the only parameter than matters when deciding on a robust correlation method, so the analyst still has an important role! Nonetheless, this shows how easy the library makes it to compare large numbers of reference datasets and get back useful results fast. Upcoming updates to the library will aim to reduce the code required to perform the looping procedure, so watch this space!

Other correlation methods¶

The library also includes a host of other correlation functions such as:

SimpleSpeedRatio()OrthogonalLeastSquares()MultipleLinearRegression()Speedsort()SVR()

The SimpleSpeedRatio() is simply a ratio of the overlapping target and reference wind speed time series.

The OrthogonalLeastSquares() function carries out a orthogonal least squares regression correlation.

The MultipleLinearRegression() function allows linear regression to be carried out between multiple reference datasets and the site data.

The Speedsort() function is a directional correlation method developed by Brian Hurley and Ciaran King as outlined in the paper “The Speedsort, DynaSort and Scatter wind correlation methods”, Wind Engineering Volume 29, No.3, pp 217-241.

The SVR() function is a support vector machine correlation method which is a type of Machine Learning alogorithim.

These functions will be the subject of future tutorials relating to correlation methods.